There are many definitions of privacy. How do they differ, and why does the difference matter? I wrote the essay below as a position paper for the Workshop on Networked Privacy at CHI 2018.

In this digital world, we shed data unintentionally as we go about our daily business. Vaguely, most of us are aware that we ought to worry about this and perhaps exert some measure of control over our data activities, but this is difficult to muster in the day-to-day moments of turning on lights, sending messages or playing a silly game while waiting for the bus. Concepts of privacy and data leakage themselves are too abstract to have enough relevance in daily practice. After all, what are the practical consequences of having your location or your phone ID leaked to a third party advertiser? How can anyone be expected to care about data disclosure if it is difficult to know whether handing data to a company is something to really worry about in concrete terms? People might feel “creeped out” but this does not help anyone to clearly identify what to do (Shklovski et al., 2014).

Part of the problem is in the definitions of privacy that underpin these technical concerns. People have been debating privacy for decades, but a clear and coherent definition of what privacy might mean continues to elude us. Shoshana Zuboff (2019) argues that privacy is a collective action problem, but what does that actually mean?

Popular definitions of privacy

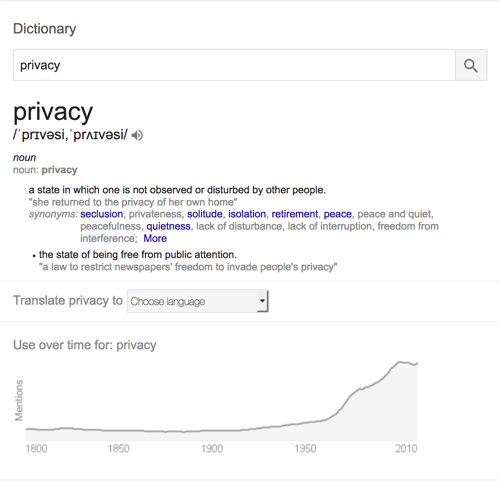

To start, it seems reasonable to use Google as the arbiter of popular truths or at least conjectures. Typing “define: privacy” into Google returns a set of options, presumably drawn from a dictionary popular with Google users who are perhaps similar to me.

The first definition is “a state in which one is not observed or disturbed by other people.” The second reads “the state of being free from public attention.” These two are very close in spirit to the commonly referenced “right to be let alone” famously articulated by Samuel Warren and Louis Brandeis (1890) in their Harvard Law Review article, written in part as a response to intrusive press photography. These definitions are interesting because they offer a relational concept of privacy, defining a particular socially negotiated state of being. No statement is made about how this state might be achieved, but the very existence of these definitions points to such a state being potentially desirable.

There are fundamental values and rights at stake here: a demand for a society to afford its members a kind of dignity (Margalit, 1998). This is a rights-based framing where an individual can claim a right to be free from public attention, a right to not be observed or disturbed by other people at least in some situations or contexts. If it is a right, then it ought to be afforded to the individual by those around them. It is the responsibility of societal actors to ensure this can happen.

Wikipedia is another common arbiter of conjecture in the digital world. The privacy definition on Wikipedia is produced collectively and thus potentially represents the collective opinions of a broad range of Internet users or, at least, Wikipedians. Wikipedia furnishes a somewhat different definition, shifting from a passive “state of being unobserved” to a more active and internalized “ability”: “Privacy is the ability of an individual or group to seclude themselves, or information about themselves, and thereby express themselves selectively. The boundaries and content of what is considered private differ among cultures and individuals, but share common themes. When something is private to a person, it usually means that something is inherently special or sensitive to them.” Wikipedia’s definition is much more agentive, sketching the practices necessary to achieve a desired state through “seclusion” and “selective expression”. Privacy here is not a state but the ability to control access to some thing that might be “private” and that “inherently” has particular definable characteristics of “special” or “sensitive”.

So what?

The differences here are crucial and they have to do with where responsibility lies.

If privacy is an ability, then the responsibility for exercising that ability lies with the individual wishing to do so. Having an ability is an individual characteristic, and only through active exercise of that ability can it be recognized and respected. If privacy is a state, then it ought to be recognized and respected as a relational, socially orchestrated set of expectations.

If privacy as a state of being unobserved or undisturbed is to be respected then any observation or disturbance must be first signaled. The responsibility for the breach lies with the one disturbing.

Design considerations for privacy research, system design and policy must be different depending on the point of view, because the two definitions are clearly at odds. Let’s unpack this a little bit.

Privacy as ability

The Wikipedia definition asks how the individual must act to control access to the things they characterize as “special” or “sensitive”. The technical infrastructures of our digital lives have settled on this definition rather than the earlier Warren and Brandeis notion of being left alone. Whether through privacy-enhancing technologies or policy, we see efforts to suggest, support, nudge or enable individuals to exercise control over their data (Wang et al., 2014). The privacy-as-ability view encourages thinking about how to enable this ability to flourish, perhaps through better information, education or tools. While these may get us part of the way, they seem to inevitably fall short of the goal. If it is internal characteristics or inherent differences between individuals that are responsible for the concerns and problems, then perhaps the solution is simply to account for those differences by tweaking perceptions. After all, research suggests that the illusion of control can ensure higher levels of comfort with disclosure (Brandimarte et al., 2009), while an illusory loss of control can lead to panic (Hoadley et al., 2010). Thinking of privacy as an ability removes the need to really understand the origins of concerns. Instead, this definition enables a mechanistic expectation that addressing individual differences will make more people comfortable with the same technologies. Yet the problems persist, and their very persistence suggests that this demand for individual control may not be tenable. Perhaps, as some have pointed out, it is the relationships that define our data and technology interactions that need attention.

Privacy as a state

The original Warren and Brandeis notion of privacy as the right to be let alone insists on privacy as a relational act, a normatively driven social negotiation in which people must work to afford each other the space and time to be left alone. If privacy is a state, then how must society and its denizens act to respect someone’s state of privacy? Answering this requires a discussion of the premises on which expectations of privacy are built, and of the rights and responsibilities of all the actors involved. We teach our children not to stare at others in the street should they happen to look odd or different, and we work hard not to do so ourselves. How might we design to afford this kind of dignity? This definition demands a relational approach to thinking about privacy because, on closer inspection, the concept dissolves (Crabtree et al., 2017) into everyday concerns with relationship management, whether among people or between people and the organizations and institutions they live with. Here the idea of empowerment towards control of personal data is moot.

Insistence on individual responsibility for data activities instead forces people to take refuge in the notion of control, even though they realize such control is illusory (Danezis & Gürses, 2010). Empowering users to take control of their data merely shifts untenable amounts of responsibility onto them for decisions about data disclosure, without actually changing the nature of the relationship they have with the providers of their digital services. Yet any exchange of data is a relational act, however fleeting. A relational act is not merely a binary choice of whether or not to reveal a bit of data, but an intricate negotiation of obligations and responsibilities given disclosures and knowledge.

Jennifer Nedelsky (1990) notes that privacy fundamentally entails relationships of respect. To me this suggests an explanation for why the inherent data promiscuity built into digital systems feels like a violation. This is because the current design of digital systems takes away from us the fundamental choice of whether or not to enter into a relationship and this feels profoundly wrong.

“To take the choice of another…to forget their concrete reality, to abstract them, to forget that you are a node in a matrix, that actions have consequences. We must not take the choice of another being. What is community but a means to…for all individuals to have…our choices.” (China Miéville, 2000, Perdido Street Station, p. 692)

References

Laura Brandimarte, Alessandro Acquisti, George Loewenstein, and Linda Babcock. 2009. Privacy Concerns and Information Disclosure: An Illusion of Control Hypothesis. Retrieved February 1, 2018 from https://www.ideals.illinois.edu/handle/2142/15344

Andy Crabtree, Peter Tolmie, and Will Knight. 2017. Repacking ‘Privacy’ for a Networked World. Computer Supported Cooperative Work (CSCW) V26(4–6), 453–488. https://doi.org/10.1007/s10606-017-9276-y

George Danezis and Seda Gürses. 2010. A critical review of 10 years of Privacy Technology. The Proceedings of Surveillance Cultures: A Global Surveillance Society?

Christopher M. Hoadley, Heng Xu, Joey J. Lee, and Mary Beth Rosson. 2010. Privacy as information access and illusory control: The case of the Facebook News Feed privacy outcry. Electronic Commerce Research and Applications V9(1), 50–60.

Avishai Margalit. 1998. The Decent Society. Harvard University Press.

China Miéville. 2000. Perdido Street Station. Macmillan, London, UK.

Jennifer Nedelsky. 1990. Law, Boundaries, and the Bounded Self. Representations 30, 162–189. https://doi.org/10.2307/2928450

Irina Shklovski, Scott D. Mainwaring, Halla Hrund Skúladóttir, and Höskuldur Borgthorsson. 2014. Leakiness and Creepiness in App Space: Perceptions of Privacy and Mobile App Use. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’14), 2347–2356. https://doi.org/10.1145/2556288.2557421

Yang Wang, Pedro Giovanni Leon, Alessandro Acquisti, Lorrie Faith Cranor, Alain Forget, and Norman Sadeh. 2014. A Field Trial of Privacy Nudges for Facebook. In Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems (CHI ’14), 2367–2376. https://doi.org/10.1145/2556288.2557413

Samuel D. Warren and Louis D. Brandeis. 1890. The Right to Privacy. Harvard Law Review 4(5), 193–220.

Shoshana Zuboff. 2019. Surveillance Capitalism and the Challenge of Collective Action. New Labor Forum 28(1), 10–29.